What Is The SoftPlus Activation Function in C++ Neural Nets?

By Yilmaz Yoru November 24, 2021

What is the SoftPlus Activation Function in ANNs? How can we use the SoftPlus Activation Function? Let’s recap the details of activation functions and explain these terms.




According to the 2001 paper by Dugas et al. that introduced the SoftPlus function, the basic idea of the proposed class of functions is to replace the sigmoid of a sum by a product of Softplus or sigmoid functions over each of the dimensions (using the Softplus over the convex dimensions and the sigmoid over the others). In this way, they introduced a new class of functions, similar to multi-layer neural networks, that has the desired properties, such as convexity along the chosen dimensions.

Another study was published by Xavier Glorot, Antoine Bordes and Yoshua Bengio in a paper titled “Deep Sparse Rectifier Neural Networks”. According to this study, while logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multi-layer artificial neural networks.

According to this paper, “rectifying neurons are an even better model of biological neurons and yield equal or better performance than hyperbolic tangent networks in spite of the hard non-linearity and non-differentiability at zero, creating sparse representations with true zeros, which seem remarkably suitable for naturally sparse data. Even though they can take advantage of semi-supervised setups with extra-unlabeled data, deep rectifier networks can reach their best performance without requiring any unsupervised pre-training on purely supervised tasks with large labeled datasets. Hence, these results can be seen as a new milestone in the attempts at understanding the difficulty in training deep but purely supervised neural networks, and closing the performance gap between neural networks learned with and without unsupervised pre-training”.


What is an Activation function in Neural Networks?

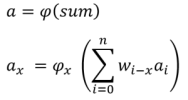

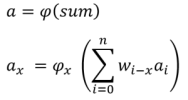

An Activation Function ( phi() ), also called a transfer function or threshold function, determines the activation value ( a = phi(sum) ) from a given value (sum) produced by the Net Input Function. In our context, sum is the sum of the input signals multiplied by their weights, and the activation function computes a new value from this sum using a given function or set of conditions. In other words, the activation function transfers the sum of all weighted signals to a new activation value for that neuron. There are many different activation functions; Linear (Identity), bipolar and logistic (sigmoid) functions are the most commonly used.

Can you create an activation function in C++?

In C++ (and in most other programming languages) you can create your own activation function. Note that sum is the result of the Net Input Function, which calculates the sum of all weighted signals, and we use it as the input of the activation function. The activation value of an artificial neuron (its output value) can then be written with the activation function as a = phi(sum).

By using this sum (the Net Input Function value) and phi() activation functions, we can implement many activation functions in C++. Now let’s see how the SoftPlus function is defined and how we can use it.

What is a SoftPlus Activation Function?

The SoftPlus Activation Function was developed and published by Dugas et al. in 2001. In short, the SoftPlus function can be written as below:

C++:

```cpp
f(x) = log( 1+exp(x) );
```


How can we write a SoftPlus activation function in C++?

A SoftPlus activation function in C++ can be written as below:

C++:

```cpp
#include <cmath> // std::log, std::exp

double phi(double sum)
{
    return std::log(1 + std::exp(sum)); // SoftPlus Function
}
```

Is there a simple C++ ANN example with a SoftPlus Activation Function?

C++:

```cpp
#include <cmath>  // std::log, std::exp
#include <cstdio> // std::printf, std::getchar

#define NN 2 // index of the last neuron; the network has NN+1 neurons (0..NN)

class Tneuron // neuron class
{
public:
    double a;       // activity (output value) of this neuron
    double w[NN+1]; // weights of the links from this neuron to each neuron

    Tneuron()
    {
        a = 0;
        for (int i = 0; i <= NN; i++) w[i] = -1; // a negative weight means there is no link
    }

    // let's define an activation function (or threshold) for the output neuron
    double phi(double sum)
    {
        return std::log(1 + std::exp(sum)); // SoftPlus Function
    }
};

Tneuron ne[NN+1]; // neuron objects

// Net Input Function + activation: sum the weighted activities of all
// neurons linked to neuron nn, then apply phi() to get its new activity
void fire(int nn)
{
    double sum = 0;
    for (int j = 0; j <= NN; j++)
    {
        if (ne[j].w[nn] >= 0) sum += ne[j].a * ne[j].w[nn];
    }
    ne[nn].a = ne[nn].phi(sum);
}

int main()
{
    // let's define activity of two input neurons (a0, a1) and one output neuron (a2)
    ne[0].a = 0.0;
    ne[1].a = 1.0;
    ne[2].a = 0;

    // let's define weights of signals coming from two input neurons to the output neuron (0 to 2 and 1 to 2)
    ne[0].w[2] = 0.3;
    ne[1].w[2] = 0.2;

    // Let's fire our artificial neuron: sum = 0.0*0.3 + 1.0*0.2 = 0.2,
    // so the output will be log(1 + exp(0.2)), about 0.798139
    fire(2);
    std::printf("%10.6f\n", ne[2].a);

    std::getchar(); // wait for a key press before the console closes
    return 0;
}
```