What Is An Identity Activation Function in Neural Networks?

By Yilmaz Yoru November 10, 2021

Do you want to learn what the simplest activation function in neural networks is? What is an Identity Function? What do we need to know about Activation Functions and Transfer Functions as AI terms? Is an Activation Function the same as a Net Input Function? Is it possible to use the Net Input Function as an Activation Function? What does any of this even mean?

или Зарегистрируйся).

There are many other definitions about the same as above. In addition to the AI term, we should add these terms too.

или Зарегистрируйся is the study of computer Для просмотра ссылки Войди или Зарегистрируйся that improve automatically through experience. While it is common to see advertisements which say “Smart” or “uses AI”, in reality, there is no genuine authentic AI yet – or not in the strictest definition of the term. We call all AI-related things as AI Technology. AI, based on its dictionary definition may be happening with Для просмотра ссылки Войди или Зарегистрируйся also called as Strong AI. There is also Artificial Biological Intelligence (ABI) term that attempts to emulate ‘natural’ intelligence.

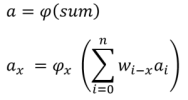

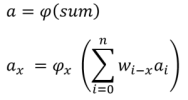

The Activation Function ( phi() ) also called as transfer function, or threshold function that determines the activation value ( a = phi(sum) ) from a given value (sum) from the Net Input Function . Net Input Function, here the sum is a sum of signals in their weights, and activation function is a new value of this sum with a given function or conditions. In other words, the activation function is a way to transfer the sum of all weighted signals to a new activation value of that signal. There are different types of activation functions, mostly Linear (Identity), bipolar and logistic (sigmoid) functions are used. The activation function and its types are explained well Для просмотра ссылки Войдиили Зарегистрируйся.

In C++ (and, in general, in most Programming Languages) you can create your activation function. Note that sum is the result of the Net Input Function which calculates the sum of all weighted signals. We will use some as a result of the input function. Here the activation value of an artificial neuron (output value) can be written by the activation function as below,

By using this sum Net Input Function Value and phi() activation functions, let’s see some of activation functions in C++;

или Зарегистрируйся, also called an Identity Relation or Identity Map or Identity Transformation, is a function in mathematics that always returns the same value that was used as its argument. We can briefly say that it is a y=x function or f(x) = x function. This function can be also used as a activation function in some AI applications.

This is a very simple Activation Function which is also an Identity Function,

return value of this function should be floating number ( float, double, long double) because of weights are generally between 0 and 1.0,

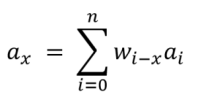

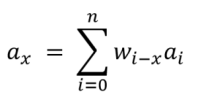

As you see in Identity function Activation Function is same as Equal to Identification Function. So in this kind of networks activation value can be directly written as follows without using phi(),

By Yilmaz Yoru November 10, 2021

Do you want to learn what is simplest activation function in neural networks? What is an Identity Function? What do we need to know about Activation Functions and Transfer Functions as an AI term? Is an Activation Function the same with a net Input Function? Is there a possibility to use Net Input Function as an Activation Function? What does any of this even mean?

What do we mean by “artificial intelligence”?

Artificial Intelligence, also called AI refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The term may also be applied to any machine that exhibits traits associated with a human mind such as learning and problem-solving. (reference: Для просмотра ссылки ВойдиThere are many other definitions about the same as above. In addition to the AI term, we should add these terms too.

Is machine learning (ML) different from artificial intelligence (AI)?

Для просмотра ссылки ВойдиWhat is the role of the activation function in how minimum artificial neurons work?

A Minimum Artificial Neuron has an activation value (a), an activation function ( phi() ) and weighted (w) input net links. So it has one activation value, one activation function and one or more weights depend on the number of its input nets.The Activation Function ( phi() ) also called as transfer function, or threshold function that determines the activation value ( a = phi(sum) ) from a given value (sum) from the Net Input Function . Net Input Function, here the sum is a sum of signals in their weights, and activation function is a new value of this sum with a given function or conditions. In other words, the activation function is a way to transfer the sum of all weighted signals to a new activation value of that signal. There are different types of activation functions, mostly Linear (Identity), bipolar and logistic (sigmoid) functions are used. The activation function and its types are explained well Для просмотра ссылки Войди

In C++ (and, in general, in most Programming Languages) you can create your activation function. Note that sum is the result of the Net Input Function which calculates the sum of all weighted signals. We will use some as a result of the input function. Here the activation value of an artificial neuron (output value) can be written by the activation function as below,

By using this sum Net Input Function Value and phi() activation functions, let’s see some of activation functions in C++;

What is the Identity Function ( y = x ) ?

An Для просмотра ссылки ВойдиThis is a very simple Activation Function which is also an Identity Function,

C++:

float phi(float sum)

{

return sum ; // Identity Function, linear transfer function f(sum)=sum

}As you see in Identity function Activation Function is same as Equal to Identification Function. So in this kind of networks activation value can be directly written as follows without using phi(),

Is there an example of using an Identity Function in C++?

Here is a full example of how to use the identity function in C++:

```cpp
#include <cstdio> // printf, getchar

#define NN 2 // index of the last neuron (NN+1 neurons in total)

class Tneuron // neuron class
{
public:
    float a;       // activity of each neuron
    float w[NN+1]; // weights of the links between neurons

    Tneuron()
    {
        a = 0;
        for (int i = 0; i <= NN; i++) w[i] = -1; // a negative weight means there is no link
    }

    // let's define an activation function (or threshold) for the output neuron
    float activation_function(float sum)
    {
        return sum; // Identity function f(sum) = sum
    }
};

Tneuron ne[NN+1]; // neuron objects

// compute the net input of neuron nn and update its activity
void fire(int nn)
{
    float sum = 0;
    for (int j = 0; j <= NN; j++)
    {
        if (ne[j].w[nn] >= 0) sum += ne[j].a * ne[j].w[nn];
    }
    ne[nn].a = ne[nn].activation_function(sum);
}

int main()
{
    // let's define the activity of two input neurons (a0, a1) and one output neuron (a2)
    ne[0].a = 0.0f;
    ne[1].a = 1.0f;
    ne[2].a = 0;

    // let's define the weights of the signals that come from the two input neurons to the output neuron (0 to 2 and 1 to 2)
    ne[0].w[2] = 0.3f;
    ne[1].w[2] = 0.2f;

    // let's fire our artificial neuron; the output will be 0.0*0.3 + 1.0*0.2 = 0.2
    fire(2);
    printf("%10.6f\n", ne[2].a);

    getchar(); // wait for input so the console window stays open
    return 0;
}
```