What Is The Hyperbolic Tangent Activation ANN Function?

By Yilmaz Yoru December 21, 2021

What is the hyperbolic tangent function? How can we use the hyperbolic tangent function in C++? Let’s answer these questions.


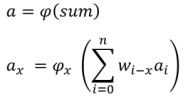

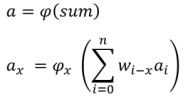


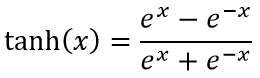

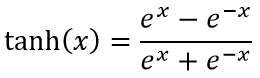

Hyperbolic functions occur in the calculations of angles and distances in hyperbolic geometry, and the hyperbolic tangent produces values strictly between -1 and 1. Hyperbolic functions also occur in the solutions of many linear differential equations, cubic equations, and Laplace’s equation in Cartesian coordinates. Laplace’s equation is important in many areas of physics, including electromagnetic theory, heat transfer, fluid dynamics, and special relativity. The hyperbolic tangent is the unique solution to the differential equation $f' = 1 - f^2$ with $f(0) = 0$.


What does an activation function mean in AI?

An activation function ( phi() ), also called a transfer function or threshold function, determines the activation value ( a = phi(sum) ) from the value (sum) given by the Net Input Function. In the Net Input Function, sum is the sum of the input signals multiplied by their weights, and the activation function turns this sum into a new value using a given function or set of conditions. In other words, the activation function is a way to transfer the sum of all weighted signals to a new activation value. There are different activation functions; linear (identity), bipolar, and logistic (sigmoid) functions are the most commonly used. The activation function and its types are explained in more detail in a separate post.

In C++ you can create your own activation function. Note that “sum” is the result of the Net Input Function, which calculates the sum of all weighted signals, and we use it as the input of the activation function. The activation value (output value) of an artificial neuron can then be written as $a = \phi(\mathrm{sum})$.

Using this Net Input Function value (sum) and a phi() activation function, let’s see how activation functions can be written in C++.

What is a Hyperbolic Tangent Function or tanh()?

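The hyperbolic tangent, tanh(), is the hyperbolic counterpart of the trigonometric tangent function and is defined as below,

$$\tanh(x) = \frac{\sinh(x)}{\cosh(x)} = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$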

In addition to all these uses, the hyperbolic tangent function can also be used as an activation function, as below:

```cpp
#include <cmath> // std::tanh

double phi(double sum)
{
    return std::tanh(sum); // Hyperbolic Tangent Function
}
```

Is there a simple ANN C++ example of the Hyperbolic Tangent function?

Here is a simple ANN example that uses the hyperbolic tangent function, written in C++:

```cpp
#include <cmath>   // std::tanh
#include <cstdio>  // std::printf, std::getchar

constexpr int NN = 2; // highest neuron index; neurons are numbered 0..NN (3 neurons)

class Tneuron // neuron class
{
public:
    float a;       // activity (output value) of this neuron
    float w[NN+1]; // w[k]: weight of the link from this neuron to neuron k

    Tneuron()
    {
        a = 0;
        for (int i = 0; i <= NN; i++) w[i] = -1; // a negative weight means there is no link
    }

    // activation function (or threshold) for the output neuron
    double activation_function(double sum)
    {
        return std::tanh(sum); // Hyperbolic Tangent Function
    }
};

Tneuron ne[NN+1]; // neuron objects

// Compute the net input of neuron nn from all linked neurons and apply its activation function
void fire(int nn)
{
    float sum = 0;
    for (int j = 0; j <= NN; j++)
    {
        if (ne[j].w[nn] >= 0) sum += ne[j].a * ne[j].w[nn];
    }
    ne[nn].a = ne[nn].activation_function(sum);
}

int main()
{
    // activity of two input neurons (a0, a1) and one output neuron (a2)
    ne[0].a = 0.0;
    ne[1].a = 1.0;
    ne[2].a = 0;

    // weights of the signals from the two input neurons to the output neuron (0 to 2 and 1 to 2)
    ne[0].w[2] = 0.3;
    ne[1].w[2] = 0.2;

    // fire the output neuron: sum = 0.0*0.3 + 1.0*0.2 = 0.2, so this prints tanh(0.2) = 0.197375
    fire(2);
    std::printf("%10.6f\n", ne[2].a);

    std::getchar(); // wait for a key press before the console window closes
    return 0;
}
```