Starter Framework for Machine Learning Projects

Yosry Negm - 14/May/2020


[SHOWTOGROUPS=4,20]

Introduce a simplified clarification about the main steps for performing statistical learning or building machine learning model.

Let’s summarize our story here. We started with Problem statement and Algorithm selection by formulating of the task of predicting which species of iris a particular flower belongs to by using physical measurements of the flower and then we prepare our project and load the associated dataset.

We used a dataset of measurements that was annotated by an expert with the correct species to build our model, making this a supervised learning task (three-class classification problem).

After that, we start exploring and visualizing the data to understand it, then split our data to training and test sets reaching to build our model and fit it by training and then test.

Introduction

In this article, I'll demonstrate some sort of a framework for working on machine learning projects. As you may know, machine learning in general is about extracting knowledge from data therefore, most of machine learning projects will depend on a data collection - called dataset - from a specific domain on which, we are investigating a certain problem to build a predictive model suitable for it.

This model should follow certain set of steps to accomplish its purpose. In the following sections, I will practically introduce a simplified clarification about the main steps for performing statistical learning or building machine learning model.

Background

I assumed that the explanation project is implemented in Python programming language inside Jupiter Notebook (IPython) depending on using Numpy, Pandas, and Scikit-Learn packages.

Problem Statement

To build better models, you should clearly define the problem that you are trying to solve, including the strategy you will use to achieve the desired solution.

I’ve chosen a simple application of Iris Species Classification in which we will create a simple machine learning model that could be used in distinguishing the species of some iris flowers by identifying some measurements associated with each iris such as the petals’ length and width as well as sepals’ length and the width, all measured in centimetres.

We will depend on a data set of previously identified measurements by experts, the flowers have been classified into the species stosa, versicolor, or virginica. Our mission is to build a model that can learn from these measurements, so that we can predict the species for a new iris.

Algorithm Selection

Depending on the nature and characteristics of the problem under investigation, we need to select algorithms and techniques suitable for solving it. Since we have measurements for which we know the correct species of iris, this is a Supervised Learning problem.

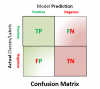

In this problem, we want to predict one of several options (the species of iris). This is an example of a classification problem. The possible outputs (different species of irises) are called classes. Every iris in the data set belongs to one of three classes, so this problem is a three-class classification problem.

The desired output for a single data point (an iris) is the species of this flower. For a particular data point, the species it belongs to is called its label or class.

Project Preparation

To begin working with our project’s data, we’ll first import the functionalities we need in our implementation such as Python libraries, setting our environment to allow us to accomplish our mission as well as loading our dataset successfully:

Then, we start loading our dataset into a pandas DataFrame. The data that will be used here is the Iris dataset, a classical dataset in machine learning and statistics. It is included in scikit-learn within datasets module.

The output will be as follows:

The data of iris flowers contains the numeric measurements of sepal length, sepal width, petal length, and petal width and is stored as Numpy array, so we have converted it to pandas Dataframe.

Note: Here we are using built-in dataset that comes with Sci-kit Learn library but in practice, the data set often comes as csv files so we could use code that looks like the following commented code:

Then we start loading our dataset into a pandas DataFrame. The data that will be used here is the Iris dataset, a classical dataset in machine learning and statistics. It is included in scikit-learn within datasets module.

The output will be as follows:

The data of iris flowers contains the numeric measurements of sepal length, sepal width, petal length, and petal width and is stored as NumPy array, so to convert it to pandas DataFrame, we will write the following code:

Data Exploration

Before building a machine learning model, we must first analyze the dataset we are using for the problem, and we are always expected to assess for common issues that may require preprocessing.

So data exploration is a necessary action that should be done before proceeding with our model implementation. This is done by showing a quick sample from the data, describing the type of data, knowing its shape or dimensions, and if needed, having basic statistics and information related to the loaded dataset, as well as exploring of input features and any abnormalities or interesting qualities about the data that may need to be addressed.

Data exploration provides you with a deeper understanding of your datasets, including Dataset schemas, Value distributions, Missing values and Cardinality of categorical features.

To start exploring our dataset represented by the iris object that is returned by load_iris() and stored in mydata pandas DataFrame, we could display the first few entries for examination using the .head() function.

To display more information about the structure of the data:

To check if there are any null values present in the dataset:

Or use the following function to get more details about these null values:

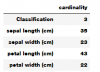

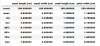

From the above sample of our dataset, we can see that the dataset contains measurements for 150 different flowers. Individual items are called examples, instances or samples in machine learning, and their properties (the five columns) are called features (four features and one column is the Target or the class for each instance or example).

The shape of the data array is the number of samples multiplied by the number of features.

This is a convention in scikit-learn, and our data will always be assumed to be in this shape.

Given below is the detailed explanation of the features and the classes contained in our dataset:

You could compare min and max and see if scale is large and there’s a need for Scaling of numerical features.

[/SHOWTOGROUPS]

Introduces a simplified explanation of the main steps for performing statistical learning or building a machine learning model.

Let’s summarize our story here. We started with the problem statement and algorithm selection, formulating the task of predicting which species of iris a particular flower belongs to from physical measurements of the flower. Then we prepared our project and loaded the associated dataset.

We used a dataset of measurements that an expert had annotated with the correct species to build our model, which makes this a supervised learning task (a three-class classification problem).

After that, we explored and visualized the data to understand it, split it into training and test sets, built our model, fitted it on the training data, and then tested it.
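The load, split, fit and test workflow summarized above can be sketched in a few lines of scikit-learn. This is a minimal sketch, not the article's own code. The following choices are assumptions made purely for illustration: the k-nearest-neighbours classifier with `n_neighbors=1`, the `random_state=0` seed, and the hypothetical new flower's measurements.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Load the features (X) and the expert-annotated species labels (y)
iris = load_iris()
X, y = iris['data'], iris['target']

# Hold out 25% of the samples as a test set (scikit-learn's default test_size)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Assumption: a 1-nearest-neighbour classifier, chosen only for illustration
model = KNeighborsClassifier(n_neighbors=1)
model.fit(X_train, y_train)

# Evaluate on samples the model did not see during training
accuracy = model.score(X_test, y_test)
print("Test set accuracy: {:.2f}".format(accuracy))

# Predict the species of a hypothetical new iris (measurements in cm)
new_iris = [[5.0, 2.9, 1.0, 0.2]]
prediction = model.predict(new_iris)
print("Predicted species:", iris['target_names'][prediction][0])
```

Any other classifier with `fit`/`predict` methods could be dropped in place of `KNeighborsClassifier`; the surrounding steps stay the same.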

Introduction

In this article, I'll demonstrate a simple framework for working on machine learning projects. Machine learning is, in general, about extracting knowledge from data. Therefore, most machine learning projects depend on a collection of data, called a dataset, from the specific domain in which we are investigating a problem, and we use it to build a predictive model suited to that problem.

Building such a model follows a certain set of steps. In the following sections, I will give a simplified, practical walkthrough of the main steps for performing statistical learning or building a machine learning model.

Background

I assume that the project is implemented in Python inside a Jupyter Notebook (IPython), using the NumPy, pandas, and scikit-learn packages, along with Matplotlib and seaborn for plotting.

Problem Statement

To build better models, you should clearly define the problem that you are trying to solve, including the strategy you will use to achieve the desired solution.

I’ve chosen a simple application, iris species classification, in which we will create a machine learning model that can distinguish the species of iris flowers from measurements of each flower: the length and width of its petals and the length and width of its sepals, all measured in centimetres.

We will rely on a dataset of measurements of flowers whose species were previously identified by experts as setosa, versicolor, or virginica. Our mission is to build a model that can learn from these measurements so that we can predict the species of a new iris.

Algorithm Selection

Depending on the nature and characteristics of the problem under investigation, we need to select algorithms and techniques suitable for solving it. Since we have measurements for which we know the correct species of iris, this is a Supervised Learning problem.

In this problem, we want to predict one of several options (the species of iris). This is an example of a classification problem. The possible outputs (different species of irises) are called classes. Every iris in the data set belongs to one of three classes, so this problem is a three-class classification problem.
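To double-check that this is a three-class problem, we can count the distinct labels with NumPy's `np.unique`. The sketch below shows that scikit-learn stores the classes as the integer codes 0, 1 and 2, with 50 flowers in each class.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()

# The target is stored as integer codes; target_names maps each code to a species
classes, counts = np.unique(iris['target'], return_counts=True)
for code, count in zip(classes, counts):
    print(code, iris['target_names'][code], count)
# prints:
# 0 setosa 50
# 1 versicolor 50
# 2 virginica 50
```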

The desired output for a single data point (an iris) is the species of this flower. For a particular data point, the species it belongs to is called its label or class.
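To make the terms "data point" and "label" concrete, the short sketch below pulls out the first flower in scikit-learn's copy of the dataset. Its four measurements form one data point. The integer stored in `target` is its label, and `target_names` translates that label into a species name.

```python
from sklearn.datasets import load_iris

iris = load_iris()

# One data point: the four measurements (in cm) of the first flower
first_sample = iris['data'][0]
# Its label: an integer class code, translated to a species name
first_label = iris['target'][0]
print("Measurements:", first_sample)
print("Label:", first_label, "->", iris['target_names'][first_label])
```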

Project Preparation

To begin working with our project’s data, we’ll first import the functionality we need, such as Python libraries, and set up our environment so that we can load our dataset successfully:

Code:

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
# For pretty display of plots in Jupyter notebooks
%matplotlib inline
# To allow the use of the display() function for pandas DataFrames
from IPython.display import display
# To ignore warnings from loaded modules during runtime execution, if needed
import warnings
warnings.filterwarnings("ignore")

Then we load our dataset into a pandas DataFrame. The data used here is the Iris dataset, a classic dataset in machine learning and statistics, which is included in scikit-learn's datasets module.

Code:

from sklearn.datasets import load_iris

iris = load_iris()
# printing feature names
print('features: %s' % iris['feature_names'])
# printing species of iris
print('target categories: %s' % iris['target_names'])
# iris data shape
print("Shape of data: {}".format(iris['data'].shape))
# converting to pandas DataFrame
full_data = pd.DataFrame(iris['data'], columns=iris['feature_names'])
# adding the target column, then mapping each class code (0, 1, 2) to its species name
full_data['Classification'] = iris['target']
full_data['Classification'] = full_data['Classification'].apply(lambda x: iris['target_names'][x])

The output will be as follows:

Code:

features: ['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)']
target categories: ['setosa' 'versicolor' 'virginica']
Shape of data: (150, 4)

The iris data contains the numeric measurements of sepal length, sepal width, petal length, and petal width, and it is stored as a NumPy array, so we converted it to a pandas DataFrame.
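The `.apply(lambda ...)` step above maps each integer class code to its species name one row at a time. A minimal alternative sketch uses NumPy fancy indexing to do the same mapping in one vectorized step. Recent scikit-learn versions (0.23 and later) can also return a DataFrame directly via `load_iris(as_frame=True)`. The sketch rebuilds `full_data` so that it runs on its own.

```python
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()

# Build the feature columns exactly as in the code above
full_data = pd.DataFrame(iris['data'], columns=iris['feature_names'])

# Vectorized mapping: indexing target_names with the array of codes
# returns the species name for every row at once
full_data['Classification'] = iris['target_names'][iris['target']]

# Same result as the row-by-row .apply(lambda x: ...) version
via_apply = pd.Series(iris['target']).apply(lambda x: iris['target_names'][x])
print((full_data['Classification'] == via_apply).all())  # True
```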

Note: Here we are using the built-in dataset that comes with the scikit-learn library, but in practice, datasets often come as CSV files, so we could use code like the following (commented out):

Code:

# myfile = 'MyFileName.csv'
# full_data = pd.read_csv(myfile, thousands=',', delimiter=';', encoding='latin1', na_values="n/a")

Data Exploration

Before building a machine learning model, we must first analyze the dataset we are using for the problem and check it for common issues that may require preprocessing.

Data exploration is therefore a necessary step before implementing our model. It involves looking at a quick sample of the data, describing its data types, finding its shape (dimensions), and, if needed, computing basic statistics about the loaded dataset. It also covers exploring the input features and any abnormalities or interesting qualities in the data that may need to be addressed.

Data exploration gives you a deeper understanding of your dataset, including its schema, value distributions, missing values, and the cardinality of categorical features.
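As a minimal sketch of these checks on our data, the code below looks at three things. `dtypes` shows the schema, `nunique()` shows the cardinality of each column, and `value_counts()` shows the distribution of the categorical target. The sketch rebuilds `full_data` so it can run on its own, using a vectorized mapping in place of the `.apply` step.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Rebuild the full_data DataFrame so the sketch is self-contained
iris = load_iris()
full_data = pd.DataFrame(iris['data'], columns=iris['feature_names'])
full_data['Classification'] = iris['target_names'][iris['target']]

# Schema: column names and their data types
print(full_data.dtypes)

# Cardinality: number of distinct values in each column
print(full_data.nunique())

# Value distribution of the categorical target
print(full_data['Classification'].value_counts())
```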

To start exploring our dataset (returned by load_iris() as the iris object and then stored in the full_data pandas DataFrame), we can display the first few entries for examination using the .head() method.

Code:

display(full_data.head())

To display more information about the structure of the data:

Code:

# Column names, non-null counts, and data types
full_data.info()
# Or only the data type of each column
full_data.dtypes

To check whether there are any null values in the dataset:

Code:

full_data.isnull().sum()

Or use the following function to get more details about these null values (it also reports the share of zeros in each column):

Код:

def NullValues(theData):

null_data = pd.DataFrame(

{'columns': theData.columns,

'Sum': theData.isnull().sum(),

'Percentage': theData.isnull().sum() * 100 / len(theData),

'zeros Percentage': theData.isin([0]).sum() * 100 / len(theData)

}

)

return null_dataFrom the above sample of our dataset, we can see that the dataset contains measurements for 150 different flowers. Individual items are called examples, instances or samples in machine learning, and their properties (the five columns) are called features (four features and one column is the Target or the class for each instance or example).

The shape of the data array is the number of samples by the number of features, which is (150, 4) here.

This is a convention in scikit-learn, and our data will always be assumed to be in this shape.
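In code, this convention means the feature matrix `X` has shape `(n_samples, n_features)` and the label vector `y` has shape `(n_samples,)`. One consequence is that even a single flower has to be passed to a scikit-learn model as a 2-D array with one row. The new flower's measurements below are hypothetical.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()
X, y = iris['data'], iris['target']

# scikit-learn convention: X is (n_samples, n_features), y is (n_samples,)
print(X.shape, y.shape)  # (150, 4) (150,)

# A single (hypothetical) new flower must still be a 2-D array with one row
new_iris = np.array([5.0, 2.9, 1.0, 0.2]).reshape(1, -1)
print(new_iris.shape)  # (1, 4)
```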

Given below is a detailed explanation of the features and the class contained in our dataset:

- sepal length: Represents the length of the sepal of the specified iris flower in centimetres

- sepal width: Represents the width of the sepal of the specified iris flower in centimetres

- petal length: Represents the length of the petal of the specified iris flower in centimetres

- petal width: Represents the width of the petal of the specified iris flower in centimetres

- Classification: Represents the species of the specified iris flower (setosa, versicolor, or virginica), which is the target class

Code:

full_data.describe()

You can compare the min and max values of each feature to see whether their scales differ widely and whether the numerical features need scaling.
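The sketch below is one way to act on that comparison, assuming we want a numeric summary rather than reading the table by eye. It computes each feature's range (max minus min) from `describe()` and, if needed, standardizes the features with scikit-learn's `StandardScaler`. Whether scaling is actually required depends on the model. Distance-based methods such as k-nearest neighbours are sensitive to feature scales, while tree-based models are not.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler

iris = load_iris()
features = pd.DataFrame(iris['data'], columns=iris['feature_names'])

# Compare value ranges using the min and max rows of describe()
stats = features.describe()
value_range = stats.loc['max'] - stats.loc['min']
print(value_range)

# If the ranges differ widely, standardize each feature to mean 0 and std 1
scaler = StandardScaler()
scaled = scaler.fit_transform(features)
print(scaled.mean(axis=0).round(6), scaled.std(axis=0).round(6))
```

In a real project, the scaler should be fitted on the training set only and then applied to the test set, so that no information from the test data leaks into training.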
