Neon Intrinsics: Getting Started on Android

Dawid Borycki - 05/May/2020

[SHOWTOGROUPS=4,20]

In this article, we see how to set up Android Studio for native C++ development and how to use Neon intrinsics on Arm-powered mobile devices.

This article is in the Product Showcase section for our sponsors at CodeProject. These articles are intended to provide you with information on products and services that we consider useful and of value to developers.

Do not repeat yourself (DRY) is one of the major principles of software development, and following this principle typically means reusing your code via functions. Unfortunately, invoking a function adds overhead. To reduce this overhead, compilers provide built-in functions called intrinsics: the compiler replaces each intrinsic used in a high-level language (C/C++) with assembly instructions that map mostly one-to-one. Improving performance further usually takes you into the realm of assembly code, but with Arm Neon intrinsics you can often avoid the complication of writing assembly functions. Instead, you program in a high-level language and call the intrinsics declared in the arm_neon.h header file.

As an Android developer, you probably don’t have the time to write assembly language. Instead, your focus is on app usability, portability, design, data access, and tuning the app to various devices. If that’s the case, Neon intrinsics can be a big performance help.

Arm Neon is an advanced single instruction, multiple data (SIMD) architecture extension for Arm processors. The idea of SIMD is to perform the same operation on a sequence or vector of data with a single instruction.

For instance, if you’re summing numbers from two one-dimensional arrays, you need to add them one by one. On a non-SIMD CPU, each array element is loaded from memory into a CPU register, the register values are added, and the result is stored in memory. This procedure is repeated for all elements. To speed up such operations, SIMD-enabled CPUs load several elements at once, perform the operation on all of them, and then store the results to memory. The improvement depends on the number of elements, N, processed at once. Theoretically, the computation time is reduced by a factor of N.
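As a rough sketch of the element-wise addition described above, the function below adds two arrays four 32-bit elements at a time when Neon is available and one element at a time otherwise. The name addArrays is hypothetical and not part of the article’s sample project; vld1q_s32, vaddq_s32, and vst1q_s32 are intrinsics declared in arm_neon.h.

```cpp
#include <cstddef>
#include <cstdint>

#if defined(__ARM_NEON)
#include <arm_neon.h>
#endif

// Hypothetical helper: out[i] = a[i] + b[i] for every i in [0, len).
void addArrays(const int32_t* a, const int32_t* b, int32_t* out, std::size_t len) {
    std::size_t i = 0;

#if defined(__ARM_NEON)
    // SIMD path: load four elements from each array, add them with one
    // instruction, and store the four results.
    for (; i + 4 <= len; i += 4) {
        int32x4_t va = vld1q_s32(a + i);
        int32x4_t vb = vld1q_s32(b + i);
        vst1q_s32(out + i, vaddq_s32(va, vb));
    }
#endif

    // Scalar path: handles the leftover elements, or the whole array when
    // Neon is not available (for example, on x86).
    for (; i < len; i++) {
        out[i] = a[i] + b[i];
    }
}
```

With 128-bit Neon registers and 32-bit elements, N is 4 here, so the vector loop runs roughly a quarter as many iterations as the scalar one. The actual speedup is usually smaller than N because memory access and loop overhead remain.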

By utilizing SIMD architecture, Neon intrinsics can accelerate the performance of multimedia and signal processing applications, including video and audio encoding and decoding, 3D graphics, and speech and image processing. Neon intrinsics provide almost as much control as writing assembly code, but they leave the allocation of registers to the compiler so developers can focus on the algorithms. Hence, Neon intrinsics strike a balance between the performance gains and the effort of writing assembly language.

First, I’m going to show you how to set up your Android development environment to use Neon intrinsics. Then, we’ll implement an Android application that uses the Android Native Development Kit (NDK) to calculate the dot product of two vectors. Finally, we’ll see how to improve the performance of such a function with Neon intrinsics.

I created the example project with Android Studio. The sample code is available from a GitHub repository. I tested the code using a Samsung SM-J710F phone.

Native C++ Android Project Template

I started by creating a new project using the Native C++ Project Template.

Then, I set the application name to Neon Intrinsics, selected Java as the language, and set the minimum SDK to API 19: Android 4.4 (KitKat).

Then, I picked Toolchain Default for the C++ Standard.

The project I created comprises one activity that’s implemented within the MainActivity class, deriving from AppCompatActivity (see app/java/com.example.neonintrinsics/MainActivity.java). The associated view contains only a TextView control that displays a “Hello from C++” string.

To get these results, you can run the project directly from Android Studio using one of the emulators. To build the project successfully, you’ll need to install CMake and the Android NDK. You do so through the settings (File | Settings). Then, you select NDK and CMake on the SDK Tools tab.

If you open the MainActivity.java file, you’ll note that the string displayed in the app comes from native-lib. This library’s code resides within the app/cpp/native-lib.cpp file. That’s the file we’ll use for our implementation.

Enabling Neon Intrinsics Support

To enable support for Neon intrinsics, you need to modify the ABI filters so the app can be built for the Arm architecture. Neon has two versions: one for Armv7 and Armv8 AArch32, and one for Armv8 AArch64. From an intrinsics point of view there are a few differences, such as the addition of vectors of 2 x float64 (float64x2_t) in Armv8-A AArch64. All of the intrinsics are declared in the arm_neon.h header file, which is included in the compiler’s installation path. You also need to import the Neon libraries.


In this article, we see how to set up Android Studio for native C++ development, and to utilize Neon intrinsics for Arm-powered mobile devices.

I’m going to show you how to set up your Android development environment to use Neon intrinsics. Then, we’ll implement an Android application that uses the Android Native Development Kit (NDK) to calculate the dot product of two vectors. Finally, we’ll see how to improve the performance of such a function with NEON intrinsics.

This article is in the Product Showcase section for our sponsors at CodeProject. These articles are intended to provide you with information on products and services that we consider useful and of value to developers.

Do not repeat yourself (DRY) is one of the major principles of software development, and following this principle typically means reusing your code via functions. Unfortunately, invoking a function adds extra overhead. To reduce this overhead, compilers take advantage of built-in functions called intrinsics, where the compiler will replace the intrinsics used in the high level programming languages (C/C++) with mostly 1-1 mapped assembly instructions. To even further improve performance, you're into the realm of assembly code, but with Arm Neon intrinsics. You can often avoid the complication of writing assembly functions. Instead you only need to program in high level languages and call the intrinsics or instruction functions declared in the arm_neon.h header file.

As an Android developer, you probably don’t have the time to write assembly language. Instead, your focus is on app usability, portability, design, data access, and tuning the app to various devices. If that's the case, Neon intrinsics is going to be a big performance help.

Для просмотра ссылки Войди

For instance, if you’re summing numbers from two one-dimensional arrays, you need to add them one by one. In a non-SIMD CPU, each array element is loaded from memory to CPU registers, then the register values are added and the result is stored in memory. This procedure is repeated for all elements. To speed up such operations, SIMD-enabled CPUs load several elements at once, perform the operations, then store results to memory. Performance will improve depending on the sequence length, N. Theoretically, the computation time will reduce N times.

By utilizing SIMD architecture, Neon intrinsics can accelerate the performance of multimedia and signal processing applications, including video and audio encoding and decoding, 3D graphics, and speech and image processing. Neon intrinsics provide almost as much control as writing assembly code, but they leave the allocation of registers to the compiler so developers can focus on the algorithms. Hence, Neon intrinsics strike a balance between performance improvement and the writing of assembly language.

First, I’m going to show you how to set up your Android development environment to use Neon intrinsics. Then, we’ll implement an Android application that uses the Android Native Development Kit (NDK) to calculate the dot product of two vectors. Finally, we’ll see how to improve the performance of such a function with NEON intrinsics.

I created the example project with Для просмотра ссылки Войди

Native C++ Android Project Template

I started by creating a new project using the Native C++ Project Template.

Then, I set the application name to Neon Intrinsics, selected Java as the language, and set the minimum SDK to API 19: Android 4.4 (KitKat).

Then, I picked Toolchain Default for the C++ Standard.

The project I created comprises one activity that’s implemented within the MainActivity class, deriving from AppCompatActivity (see app/java/com.example.neonintrinsics/MainActivity.java). The associated view contains only a TextView control that displays a “Hello from C++” string.

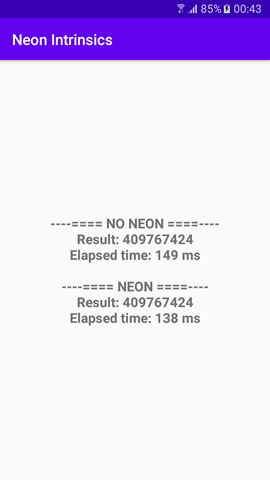

To get these results, you can run the project directly from Android Studio using one of the emulators. To build the project successfully, you’ll need to install CMake and the Android NDK. You do so through the settings (File | Settings). Then, you select NDK and CMake on the SDK Tools tab.

If you open the MainActivity.java file, you’ll note that the string displayed in the app comes from native-lib. This library’s code resides within the app/cpp/native-lib.cpp file. That’s the file we’ll use for our implementation.

Enabling Neon Intrinsics Support

To enable support for Neon intrinsics, you need to modify the Для просмотра ссылки Войди

Go to the Gradle scripts, and open the build.gradle (Module: app) file. Then, supplement the defaultConfig section by adding the following statements. First, add this line to the general settings:

Code:

    ndk.abiFilters 'x86', 'armeabi-v7a', 'arm64-v8a'

Here, I am adding support for x86 and for the 32-bit and 64-bit Arm architectures. Then add this line under the cmake options:

Code:

    arguments "-DANDROID_ARM_NEON=ON"

It should look like this:

Code:

    defaultConfig {
        applicationId "com.example.myapplication"
        minSdkVersion 16
        targetSdkVersion 29
        versionCode 1
        versionName "1.0"
        ndk.abiFilters 'x86', 'armeabi-v7a', 'arm64-v8a'
        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
        externalNativeBuild {
            cmake {
                cppFlags ""
                arguments "-DANDROID_ARM_NEON=ON"
            }
        }
    }

Now you can use Neon intrinsics, which are declared within the arm_neon.h header. Note that code that includes this header only builds for Armv7 and above. To make your code compatible with x86, you can use a Neon-to-x86 porting guide.
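Because the ABI filters above also include x86, a source file that includes arm_neon.h unconditionally would not compile for that ABI. One possible way to keep a single source file building everywhere is to guard the Neon code with the __ARM_NEON macro that compilers targeting Arm predefine when Neon is enabled. The sketch below is an assumption about how such a guard might look, not code from the sample project; the function name neonBuildInfo is hypothetical.

```cpp
#include <string>

#if defined(__ARM_NEON)
#include <arm_neon.h>  // Only included when targeting Arm with Neon enabled.
#endif

// Hypothetical helper: reports which code path this file was compiled with.
std::string neonBuildInfo() {
#if defined(__ARM_NEON) && defined(__aarch64__)
    return "Neon (AArch64)";
#elif defined(__ARM_NEON)
    return "Neon (AArch32)";
#else
    return "scalar fallback (no Neon)";
#endif
}
```

The same pattern can wrap any function that uses intrinsics, with a plain C++ loop in the fallback branch.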

Dot Product and Helper Methods

We can now implement the dot product of two vectors using C++. All the code should be placed in the native-lib.cpp file. Note that, starting from Armv8.4-A, a dot product instruction is part of the instruction set. This corresponds to some Cortex-A75 designs and all designs from Cortex-A76 onwards. See the Arm documentation for more information.

We start with the helper method that generates the ramp, which is the vector of 16-bit integers incremented from the startValue:

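Code:

    // Allocates a new array of len values: startValue, startValue + 1, ...
    // The caller owns the array and must release it with delete[].
    short* generateRamp(short startValue, short len) {
        short* ramp = new short[len];

        for (short i = 0; i < len; i++) {
            ramp[i] = startValue + i;
        }

        return ramp;
    }

Next, we implement the msElapsedTime and now methods, which will be used later to determine the execution time: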

Code:

    #include <chrono>

    using namespace std;

    // Returns the number of whole milliseconds elapsed since start.
    double msElapsedTime(chrono::system_clock::time_point start) {
        auto end = chrono::system_clock::now();

        return chrono::duration_cast<chrono::milliseconds>(end - start).count();
    }

    // Returns the current time point.
    chrono::system_clock::time_point now() {
        return chrono::system_clock::now();
    }

The msElapsedTime method calculates the duration (expressed in milliseconds) that passed from a given start point.

The now method is a handy wrapper for the std::chrono::system_clock::now method, which returns the current time.
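A minimal usage sketch of the two timing helpers follows. They are repeated here only so that the block compiles on its own; the timeWorkload function and its busy loop are hypothetical stand-ins for the code you want to measure.

```cpp
#include <chrono>

using namespace std;

// Helpers repeated from the article so that this block is self-contained.
double msElapsedTime(chrono::system_clock::time_point start) {
    auto end = chrono::system_clock::now();
    return chrono::duration_cast<chrono::milliseconds>(end - start).count();
}

chrono::system_clock::time_point now() {
    return chrono::system_clock::now();
}

// Hypothetical workload: sums the integers below n and returns how long
// that took in milliseconds. The volatile accumulator keeps the compiler
// from optimizing the loop away.
double timeWorkload(int n) {
    auto start = now();

    volatile long long sum = 0;
    for (int i = 0; i < n; i++) {
        sum = sum + i;
    }

    return msElapsedTime(start);
}
```

Note that system_clock follows the wall clock and can be adjusted while the app runs. If that matters for your measurements, std::chrono::steady_clock is the usual alternative for timing intervals.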

Now create the actual dotProduct method. As you remember from your programming classes, to calculate a dot product of two equal-length vectors, you multiply vectors element-by-element, then accumulate the resulting products. A straightforward implementation of this algorithm follows:

Code:

    // Multiplies the vectors element by element and accumulates the products.
    // Each short operand is promoted to int before the multiplication.
    int dotProduct(short* vector1, short* vector2, short len) {
        int result = 0;

        for (short i = 0; i < len; i++) {
            result += vector1[i] * vector2[i];
        }

        return result;
    }

The above implementation uses a for loop: we sequentially multiply the vector elements and accumulate the resulting products in a local variable called result.
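To check the function on a small input, the sketch below computes the dot product of two short ramps. The dotProduct function is repeated so that the block is self-contained. The makeRamp and rampDotProductExample names are hypothetical: makeRamp is a variant of generateRamp that returns a std::vector, so no delete[] is needed.

```cpp
#include <vector>

// Repeated from the article so that this block is self-contained.
int dotProduct(short* vector1, short* vector2, short len) {
    int result = 0;
    for (short i = 0; i < len; i++) {
        result += vector1[i] * vector2[i];
    }
    return result;
}

// Hypothetical variant of generateRamp that returns a std::vector, so the
// memory is released automatically.
std::vector<short> makeRamp(short startValue, short len) {
    std::vector<short> ramp(len);
    for (short i = 0; i < len; i++) {
        ramp[i] = static_cast<short>(startValue + i);
    }
    return ramp;
}

// Dot product of the ramps [0, 1, 2, 3] and [100, 101, 102, 103]:
// 0*100 + 1*101 + 2*102 + 3*103 = 614
int rampDotProductExample() {
    std::vector<short> ramp1 = makeRamp(0, 4);
    std::vector<short> ramp2 = makeRamp(100, 4);
    return dotProduct(ramp1.data(), ramp2.data(), 4);
}
```

For long vectors with large values, the int accumulator can overflow, so a wider accumulator type may be worth considering.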

[/SHOWTOGROUPS]