Face Detection on Android and iOS

September 11, 2017 Erik van Bilsen

[SHOWTOGROUPS=4,20]

Android and iOS have built-in support for detecting faces in photos. We present a small platform-independent abstraction layer to access this functionality through a uniform interface.

You can find the Для просмотра ссылки Войдиили Зарегистрируйся on GitHub as part of our Для просмотра ссылки Войди или Зарегистрируйся repository.

Like we did in our post on Для просмотра ссылки Войдиили Зарегистрируйся, we expose the functionality through a Delphi object interface. The implementation of this interface is platform-dependent. This is a common way to hide platform-specific details, and one of the ways we discussed in our post on Для просмотра ссылки Войди или Зарегистрируйся.

IgoFaceDetector API

The interface is super simple:

There is only one method. You provide it with a bitmap of a photo that may contain one or more faces, and it returns an array of TgoFace records for each detected face. This record is also pretty simple:

It contains a rectangle into the bitmap that surrounds the face, as well as the position in the bitmap of the two eyes and the distance between the eyes (in pixels). Sometimes, one or both eyes cannot be reliably detected. In that case, the positions will be (0, 0) and the distance between the eyes will be 0.

To create a face detector, call the TgoFaceDetector.Create factory function:

The parameters are optional. The AAccuracy parameter lets you trade off face detection accuracy for speed. You can choose between Low and High accuracy, which will be faster and slower respectively. This parameter is only used for iOS. On Android, you cannot specify an accuracy. Also, you can specify the maximum number of faces to detect in a photo using the AMaxFaces parameter. Again, higher values increase detection time. If you want to do real-time face detection using the built-in camera, then you probably want to set this parameter to 1.

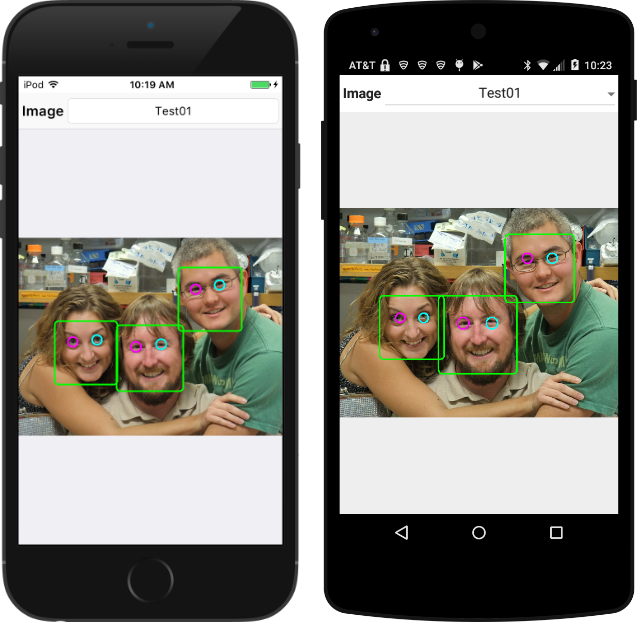

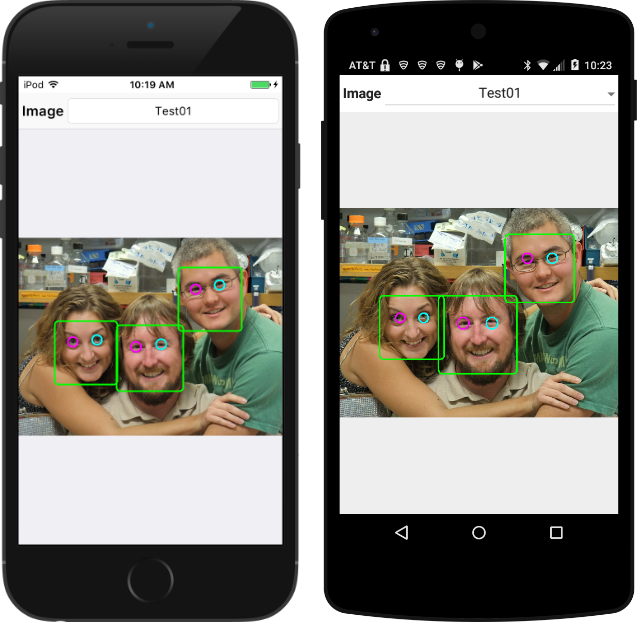

That’s all there is to it. Our GitHub repository has a little Для просмотра ссылки Войдиили Зарегистрируйся that runs the face detector on a set of photographs. This is what the result looks like on iOS and Android:

The face detection capabilities are different for both platforms. In general, the iOS version is able to detect more faces (or with higher accuracy) than the Android version.

Null Implementation

This post is about face detection on Android and iOS only since they have built-in support this. On other platforms, you can use libraries like Для просмотра ссылки Войдиили Зарегистрируйся the create similar functionality, but that is not as straight-forward and outside the scope of this article.

However we don’t want you to add {$IFDEF‘s everywhere in your code to exclude the face detector on other platforms. So, there is also a null (or no-op) implementation of the IgoFaceDetector interface that does nothing and just returns an empty array of faces:

This implementation is used for all platforms except iOS and Android.

iOS Implementation

On iOS, face detection functionality is available as part of the CoreImage framework. You need to create two objects: one of type CIContext and one of type CIDetector:

The context is created with default options using the contextWithOptions “class” function. Whenever you create an Objective-C object using one of these “class” functions (instead of using a constructor), then the returned object is a so-called auto-release object. This means that the object will be destroyed automatically at some point unless you retain a reference to it. So we need to call retain to keep the object alive, and call release once we no longer need the object (which is done inside the destructor).

A similar model is used to create the detector object. You pass a dictionary with detection options. In this case, the dictionary contains just a single option with the detection accuracy. You also need to specify the type of detector you require. In our example, we want a face detector, but you can also create a detector to detect text or QR codes for example.

The code to actually detect the faces is a bit more involved, but not too bad:

The first step is to convert the given FireMonkey bitmap to a CoreImage bitmap that the detector can handle. This involves creating an NSData object with the raw bitmap data, and passing that data to the imageWithBitmapData “class” function of CIImage.

[/SHOWTOGROUPS]

Android and iOS have built-in support for detecting faces in photos. We present a small platform-independent abstraction layer to access this functionality through a uniform interface.

You can find the [link hidden] on GitHub as part of our [link hidden] repository.

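Like we did in our post on [link hidden], we expose the functionality through a Delphi object interface. The implementation of this interface is platform-dependent. This is a common way to hide platform-specific details, and one of the ways we discussed in our post on [link hidden].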

IgoFaceDetector API

The interface is super simple:

```delphi
type
  IgoFaceDetector = interface
    function DetectFaces(const ABitmap: TBitmap): TArray<TgoFace>;
  end;
```

There is only one method. You provide it with a bitmap of a photo that may contain one or more faces, and it returns an array of TgoFace records, one for each detected face. This record is also pretty simple:

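```delphi
type
  TgoFace = record
    Bounds: TRectF;
    LeftEyePosition: TPointF;
    RightEyePosition: TPointF;
    EyesDistance: Single;
  end;
```

It contains a rectangle in bitmap coordinates that surrounds the face, as well as the positions of the two eyes in the bitmap and the distance between the eyes (in pixels). Sometimes, one or both eyes cannot be reliably detected. In that case, the positions will be (0, 0) and the distance between the eyes will be 0.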
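Because an undetected eye is reported at position (0, 0), calling code may want to check for that before using the eye data. The following is a minimal sketch of such a check. The `HasBothEyes` helper is hypothetical and not part of the library, and the record is redeclared locally only so the program compiles on its own.

```delphi
program EyeCheckSketch;

{$APPTYPE CONSOLE}

uses
  System.Types;

type
  { Local copy of the record from the article, so the sketch is self-contained. }
  TgoFace = record
    Bounds: TRectF;
    LeftEyePosition: TPointF;
    RightEyePosition: TPointF;
    EyesDistance: Single;
  end;

{ Hypothetical helper: True only when both eye positions were reported. }
function HasBothEyes(const AFace: TgoFace): Boolean;
begin
  Result := (AFace.LeftEyePosition <> PointF(0, 0))
    and (AFace.RightEyePosition <> PointF(0, 0));
end;

var
  Face: TgoFace;
begin
  { All fields zero: this is what a face without detected eyes looks like. }
  Face := Default(TgoFace);
  Writeln(HasBothEyes(Face)); // FALSE

  { Fill in both eyes, as a detector would for a clear frontal face. }
  Face.LeftEyePosition := PointF(120, 90);
  Face.RightEyePosition := PointF(180, 90);
  Face.EyesDistance := Face.LeftEyePosition.Distance(Face.RightEyePosition);
  Writeln(HasBothEyes(Face)); // TRUE
end.
```

Checking the positions rather than only `EyesDistance` also covers the case where just one eye is missing.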

To create a face detector, call the TgoFaceDetector.Create factory function:

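```delphi
type
  TgoFaceDetector = class // static
  public
    class function Create(
      const AAccuracy: TgoFaceDetectionAccuracy = TgoFaceDetectionAccuracy.High;
      const AMaxFaces: Integer = 5): IgoFaceDetector; static;
  end;
```

Both parameters are optional. The AAccuracy parameter lets you trade detection accuracy for speed: Low is faster and High is slower. This parameter is only used on iOS; on Android, you cannot specify an accuracy. The AMaxFaces parameter sets the maximum number of faces to detect in a photo. Again, higher values increase detection time. If you want to do real-time face detection using the built-in camera, then you probably want to set this parameter to 1.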
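As a rough usage sketch, the factory function and the single interface method could be combined as follows. The unit name `Grijjy.FaceDetection` is an assumption (use whatever unit the repository actually provides), and `CountFaces` is a hypothetical helper written for illustration.

```delphi
unit FaceCountSketch;

interface

{ Hypothetical helper: returns the number of faces found in an image file. }
function CountFaces(const AFileName: String): Integer;

implementation

uses
  FMX.Graphics,
  Grijjy.FaceDetection; // assumed unit name; adjust to match the repository

function CountFaces(const AFileName: String): Integer;
var
  Detector: IgoFaceDetector;
  Bitmap: TBitmap;
  Faces: TArray<TgoFace>;
begin
  { Low accuracy and at most one face: the fastest combination. }
  Detector := TgoFaceDetector.Create(TgoFaceDetectionAccuracy.Low, 1);

  Bitmap := TBitmap.CreateFromFile(AFileName);
  try
    Faces := Detector.DetectFaces(Bitmap);
  finally
    Bitmap.Free;
  end;

  Result := Length(Faces);
end;

end.
```

The detector is held through its interface, so it is released automatically when `Detector` goes out of scope.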

That’s all there is to it. Our GitHub repository has a little Для просмотра ссылки Войди

The face detection capabilities differ between the two platforms. In general, the iOS version is able to detect more faces (or with higher accuracy) than the Android version.

Null Implementation

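This post is about face detection on Android and iOS only, since they have built-in support for this. On other platforms, you can use libraries like [link hidden] to create similar functionality, but that is not as straightforward and is outside the scope of this article.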

However, we don’t want you to add {$IFDEF}s everywhere in your code to exclude the face detector on other platforms. So, there is also a null (or no-op) implementation of the IgoFaceDetector interface that does nothing and just returns an empty array of faces:

```delphi
type
  TgoFaceDetectorImplementation = class(TInterfacedObject, IgoFaceDetector)
  protected
    { IgoFaceDetector }
    function DetectFaces(const ABitmap: TBitmap): TArray<TgoFace>;
  end;

function TgoFaceDetectorImplementation.DetectFaces(
  const ABitmap: TBitmap): TArray<TgoFace>;
begin
  Result := nil;
end;
```

This implementation is used for all platforms except iOS and Android.
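To illustrate why a null implementation removes the need for conditional compilation in calling code, here is a small stand-alone sketch. `TFace`, `IFaceDetector` and `TNullFaceDetector` are simplified stand-ins invented for this example (the bitmap parameter is dropped so the program runs without FireMonkey); they are not the library's types.

```delphi
program NullDetectorSketch;

{$APPTYPE CONSOLE}

type
  { Simplified stand-in for TgoFace. }
  TFace = record
    EyesDistance: Single;
  end;

  { Simplified stand-in for IgoFaceDetector (no bitmap parameter). }
  IFaceDetector = interface
    function DetectFaces: TArray<TFace>;
  end;

  { Null implementation: always reports that no faces were found. }
  TNullFaceDetector = class(TInterfacedObject, IFaceDetector)
  protected
    function DetectFaces: TArray<TFace>;
  end;

function TNullFaceDetector.DetectFaces: TArray<TFace>;
begin
  Result := nil; // nil is an empty dynamic array
end;

var
  Detector: IFaceDetector;
  Faces: TArray<TFace>;
  Face: TFace;
begin
  Detector := TNullFaceDetector.Create;
  Faces := Detector.DetectFaces;

  Writeln('Faces found: ', Length(Faces)); // Faces found: 0

  { The loop body never runs for an empty array, so no platform check is needed. }
  for Face in Faces do
    Writeln('Eye distance: ', Face.EyesDistance:0:1);
end.
```

The calling code is identical to what it would be with a real detector; it simply sees zero faces.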

iOS Implementation

On iOS, face detection functionality is available as part of the CoreImage framework. You need to create two objects: one of type CIContext and one of type CIDetector:

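```delphi
constructor TgoFaceDetectorImplementation.Create(
  const AAccuracy: TgoFaceDetectionAccuracy; const AMaxFaces: Integer);
var
  Options: NSDictionary;
  Value: Pointer;
begin
  inherited Create;
  FMaxFaces := AMaxFaces;

  FContext := TCIContext.Wrap(TCIContext.OCClass.contextWithOptions(nil));
  FContext.retain;

  if (AAccuracy = TgoFaceDetectionAccuracy.Low) then
    Value := CIDetectorAccuracyLow
  else
    Value := CIDetectorAccuracyHigh;

  Options := TNSDictionary.Wrap(TNSDictionary.OCClass.dictionaryWithObject(
    Value, CIDetectorAccuracy));

  FDetector := TCIDetector.Wrap(TCIDetector.OCClass.detectorOfType(
    CIDetectorTypeFace, FContext, Options));
  FDetector.retain;
end;
```

The context is created with default options using the contextWithOptions “class” function. Whenever you create an Objective-C object using one of these “class” functions (instead of using a constructor), the returned object is a so-called auto-release object. This means that the object will be destroyed automatically at some point unless you retain a reference to it. So we need to call retain to keep the object alive, and call release once we no longer need the object (which is done inside the destructor).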
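The destructor itself is not shown in this post. A matching destructor might look roughly like the sketch below, assuming the class declares `destructor Destroy; override;` and that `FContext` and `FDetector` are the fields assigned in the constructor. It is a fragment of the iOS implementation class, not a stand-alone program.

```delphi
destructor TgoFaceDetectorImplementation.Destroy;
begin
  { Balance the retain calls made in the constructor. The nil checks guard
    against a constructor that failed before both objects were created. }
  if (FDetector <> nil) then
    FDetector.release;

  if (FContext <> nil) then
    FContext.release;

  inherited;
end;
```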

A similar model is used to create the detector object. You pass a dictionary with detection options. In this case, the dictionary contains just a single option with the detection accuracy. You also need to specify the type of detector you require. In our example, we want a face detector, but you can also create a detector to detect text or QR codes for example.

The code to actually detect the faces is a bit more involved, but not too bad:

```delphi
function TgoFaceDetectorImplementation.DetectFaces(
  const ABitmap: TBitmap): TArray<TgoFace>;
var
  ...
begin
  { Create a CIImage with the contents of our FireMonkey bitmap. }
  if (not ABitmap.Map(TMapAccess.Read, SrcData)) then
    Exit(nil);

  try
    Data := TNSData.Wrap(TNSData.OCClass.dataWithBytes(SrcData.Data,
      SrcData.Width * SrcData.Height * SrcData.BytesPerPixel));
  finally
    ABitmap.Unmap(SrcData);
  end;

  { Now we can create a CIImage using this data. }
  Size.width := ABitmap.Width;
  Size.height := ABitmap.Height;
  Format := kCIFormatBGRA8;
  Image := TCIImage.Wrap(TCIImage.OCClass.imageWithBitmapData(Data,
    ABitmap.Width * 4, Size, Format, nil));

  { Pass the image to the face detector. }
  Features := FDetector.featuresInImage(Image, nil);
  if (Features = nil) then
    Exit(nil);

  { Convert the CIFaceFeature objects to TgoFace records. }
  Count := Min(Features.count, FMaxFaces);
  SetLength(Result, Count);

  for I := 0 to Count - 1 do
  begin
    SrcFeature := TCIFaceFeature.Wrap(Features.objectAtIndex(I));

    { Calculate the face bounds. }
    R := SrcFeature.bounds;
    DstFace.Bounds.Left := R.origin.x;
    DstFace.Bounds.Top := ABitmap.Height - R.origin.y - R.size.height;
    DstFace.Bounds.Width := R.size.width;
    DstFace.Bounds.Height := R.size.height;

    { Convert the eye positions. }
    if (SrcFeature.hasLeftEyePosition) then
    begin
      P := SrcFeature.leftEyePosition;
      DstFace.LeftEyePosition := PointF(P.x, ABitmap.Height - P.y);
    end
    else
      DstFace.LeftEyePosition := PointF(0, 0);

    if (SrcFeature.hasRightEyePosition) then
    begin
      P := SrcFeature.rightEyePosition;
      DstFace.RightEyePosition := PointF(P.x, ABitmap.Height - P.y);
    end
    else
      DstFace.RightEyePosition := PointF(0, 0);

    { Calculate the distance between the eyes manually. }
    DstFace.EyesDistance := DstFace.LeftEyePosition.Distance(DstFace.RightEyePosition);

    { Store the converted face in the result array. }
    Result[I] := DstFace;
  end;
end;
```

The first step is to convert the given FireMonkey bitmap to a CoreImage bitmap that the detector can handle. This involves creating an NSData object with the raw bitmap data, and passing that data to the imageWithBitmapData “class” function of CIImage.

This is another case where we use “class” functions to create Objective-C objects. But in this case, the NSData and CIImage objects are only used for the duration of the DetectFaces method. So we don’t have to use retain (and release) to keep the objects alive.

Next, the image is passed to the featuresInImage method of the detector, which returns an NSArray of CIFaceFeature objects. The last half of the code above just converts these CIFaceFeature objects to TgoFace records. The only caveat is that CIImage objects store bitmaps in bottom-up order, so they are upside-down compared to FireMonkey bitmaps. So in the code above, vertical coordinates are subtracted from the bitmap height to compensate for this.
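The vertical flip can be tried in isolation. The sketch below applies the same arithmetic as the conversion code to plain numbers, so it runs without Core Image. `FlipRect` and `FlipPoint` are hypothetical helper names used only for this illustration.

```delphi
program FlipCoordinatesSketch;

{$APPTYPE CONSOLE}

uses
  System.Types;

{ Converts a bottom-up rectangle (origin at the bottom-left corner) to a
  top-down TRectF for a bitmap of the given height. }
function FlipRect(const AX, AY, AWidth, AHeight, ABitmapHeight: Single): TRectF;
begin
  Result.Left := AX;
  Result.Top := ABitmapHeight - AY - AHeight;
  Result.Width := AWidth;   // sets Right to Left + AWidth
  Result.Height := AHeight; // sets Bottom to Top + AHeight
end;

{ Converts a bottom-up point to a top-down TPointF. }
function FlipPoint(const AX, AY, ABitmapHeight: Single): TPointF;
begin
  Result := PointF(AX, ABitmapHeight - AY);
end;

var
  R: TRectF;
  P: TPointF;
begin
  { An 80x80 face whose bottom edge is 300 pixels above the bottom of a
    480-pixel-high bitmap has its top edge 100 pixels below the top. }
  R := FlipRect(100, 300, 80, 80, 480);
  Writeln(R.Left:0:0, ' ', R.Top:0:0, ' ', R.Right:0:0, ' ', R.Bottom:0:0);
  // 100 100 180 180

  { An eye 350 pixels above the bottom is 130 pixels below the top. }
  P := FlipPoint(130, 350, 480);
  Writeln(P.X:0:0, ' ', P.Y:0:0); // 130 130
end.
```

Note that a rectangle needs its height subtracted as well, because its origin moves from the bottom edge to the top edge; a point only needs the single subtraction.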
